📄 What’s the Matter

In an era dominated by massive funding rounds, corporate AI arms races, and ever-larger models, two undergraduate students have caught the tech world’s attention. Meet Dia, an ✨ open source AI text-to-speech (TTS) model ✨ created by Korean startup Nari Labs. It is not just another voice synthesis tool: it is positioned as a serious rival to offerings like ElevenLabs and Sesame CSM-1B, despite being built with ❌ zero funding. With 😍 emotional expression, 👥 speaker variation, and 🪗 non-verbal sound simulation, Dia shows what passion, talent, and accessible tech can achieve.

In this article, we take a deep dive into how Dia came to be, how it works, why it matters, and what it tells us about the future of 🔗 open source AI.

👤 Who Built Dia? The Story Behind Nari Labs

Nari Labs was founded by Toby Kim and a fellow undergraduate student, both enrolled in South Korean universities. What sets their story apart isn’t just their youth, but the fact that they built Dia—a state-of-the-art voice model—without external funding, venture capital, or even institutional backing.

📊 Inspired by Google’s NotebookLM, they built and trained their model on free compute from the TPU Research Cloud program. The duo’s success echoes Sam Altman’s famous tweet: “You can just do things.”

🌟 What is Dia? Features That Stand Out

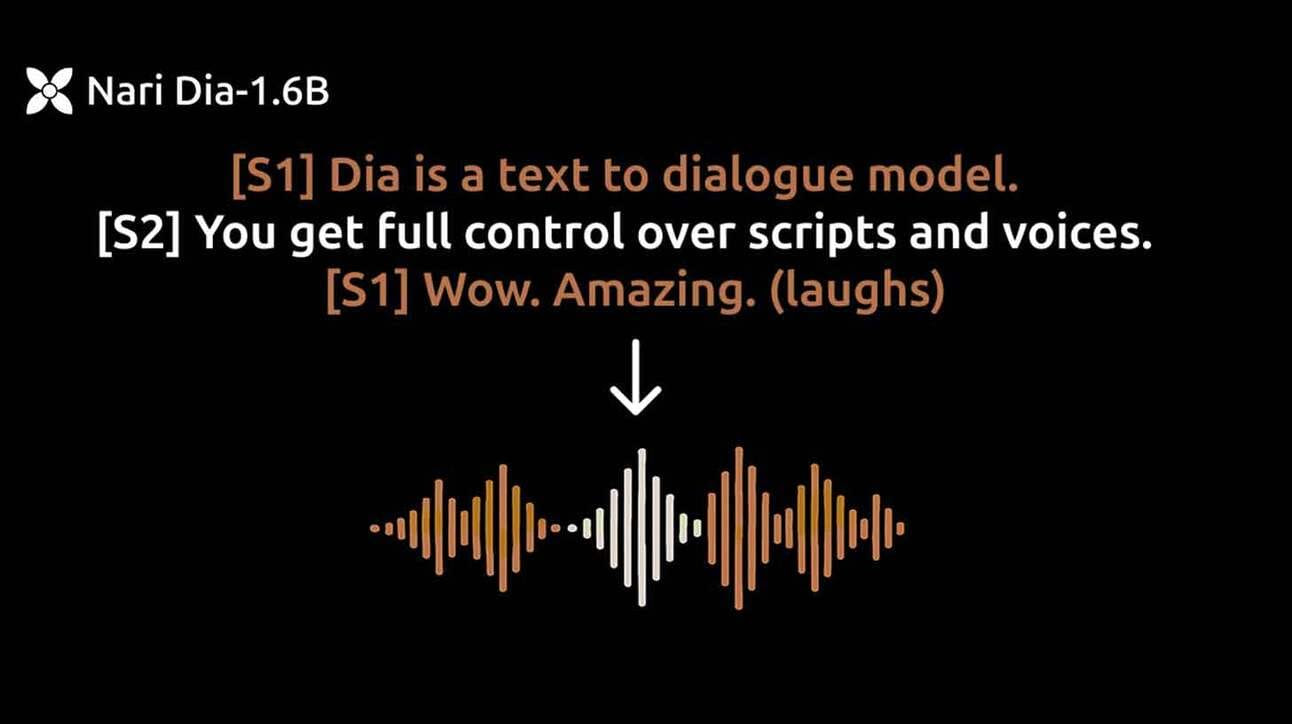

Unlike most open source voice models, Dia is anything but basic. The model boasts:

- ⚖️ 1.6 billion parameters

- 😃 Support for multiple emotional tones (e.g., happy, sad, angry)

- Generation of non-verbal sounds like laughter, coughing, and even sighs

- 👥 Multiple speaker tags for dialogue differentiation

- ⏳ Timing and pacing adjustments to mimic natural conversation

This level of nuance is rare even in premium, paid AI voice models, making Dia an extraordinary achievement in the open source realm.
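To make the speaker-tag and non-verbal features a little more concrete, here is a minimal sketch of how a two-person script might be assembled before being handed to a TTS model. The article does not spell out Dia’s exact input syntax, so the `[S1]`/`[S2]` bracket tags, the parenthesised cues, and the names `Turn`, `build_script`, and `ALLOWED_CUES` are all assumptions made for illustration. Check the project’s own documentation for the real format.

```python
from dataclasses import dataclass
from typing import Iterable, Optional

# Assumed cue vocabulary, taken from the sounds this article mentions.
# The real model may accept a different (or larger) set.
ALLOWED_CUES = {"laughs", "coughs", "sighs"}


@dataclass(frozen=True)
class Turn:
    """One line of dialogue (hypothetical helper type)."""
    speaker: int                 # 1-based speaker index
    text: str                    # what the speaker says
    cue: Optional[str] = None    # optional non-verbal sound after the line


def build_script(turns: Iterable[Turn]) -> str:
    """Join dialogue turns into a single tagged script string.

    Assumed format: "[S<n>] text (cue)", with turns separated by spaces.
    """
    parts = []
    for turn in turns:
        if turn.speaker < 1:
            raise ValueError(f"speaker index must be >= 1, got {turn.speaker}")
        if turn.cue is not None and turn.cue not in ALLOWED_CUES:
            raise ValueError(f"unknown non-verbal cue: {turn.cue!r}")
        line = f"[S{turn.speaker}] {turn.text.strip()}"
        if turn.cue:
            line += f" ({turn.cue})"
        parts.append(line)
    return " ".join(parts)


if __name__ == "__main__":
    script = build_script([
        Turn(1, "Did you hear two students built this?"),
        Turn(2, "No way.", cue="laughs"),
    ])
    print(script)
```

Validating cues up front is a deliberate choice in this sketch: a typo in a cue would otherwise be passed through silently and might simply be read aloud as text.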

🔗 Open Source AI and Its Rising Influence

The rise of Dia signals a broader shift in the AI ecosystem: the increasing power and influence of 🔗 open source AI. While companies like OpenAI and ElevenLabs guard their tech behind paywalls and proprietary systems, projects like Dia invite global collaboration and innovation.

✅ Benefits of open source AI include:

- 🔒 Transparency: Anyone can audit, modify, or improve the code.

- 🌐 Accessibility: Developers and creators worldwide can use it freely.

- ✊ Collaboration: Researchers can contribute to and iterate on the base model.

- 💼 Education: Students and self-learners can explore real-world models without institutional gatekeeping.

Dia stands as a shining example of how open source principles can yield commercial-grade results.

🏋️ Dia vs. ElevenLabs: A Genuine Alternative

When we talk about text-to-speech excellence, ElevenLabs often leads the conversation. However, side-by-side comparisons reveal that Dia holds its own:

| 🔹 Feature | 🚀 Dia | 📈 ElevenLabs Studio |
|---|---|---|
| 😍 Emotional Expression | Yes | Yes |
| 😒 Non-Verbal Sounds | Yes | Limited |
| 🔗 Open Source | Yes | No |
| 💰 Price | Free | Paid |
| 👥 Multi-Speaker Tags | Yes | Yes |
| ⏳ Timing Control | Yes | Yes |
| 🔧 Community Customization | Yes | No |

🎉 Going by this comparison, Dia matches ElevenLabs on core features and goes further on non-verbal sounds, all in a free, open source package. For startups, educators, or content creators on a budget, Dia is a compelling alternative to ElevenLabs.

🚀 The Technology Behind Dia

Dia’s architecture hasn’t been fully disclosed, but it likely builds upon transformer-based language and audio processing models. The training involved large datasets of multilingual speech, annotated with emotion and speaker metadata.

🚗 The use of Google TPU Research Cloud was pivotal. TPUs (Tensor Processing Units) are accelerators designed for large-scale machine learning workloads, and free access to them enabled the Nari Labs team to iterate quickly and cost-effectively.

Moreover, their open source documentation reveals:

- 🔄 Modular design for ease of customization

- 📊 Scalable training pipeline for continuous learning

- ✏️ Fine-tuning instructions for adapting the voice to specific applications

🔊 Use Cases: Why Dia Matters

The potential applications of Dia are vast:

- 🎧 Content creation: YouTubers, podcasters, and streamers can create expressive audio without hiring voice actors.

- 🏫 Education: Teachers and trainers can develop multilingual, emotionally resonant lessons.

- ♿️ Accessibility: People with disabilities can use it for customized speech assistance.

- 🎮 Entertainment: Game developers can generate rich, character-specific dialogue.

Because it’s open source, Dia also enables experimentation. Developers can fine-tune it for niche applications like storytelling, audiobook narration, or even therapy tools.

⚠️ Challenges and Ethical Considerations

No AI innovation is without its challenges. With Dia being open source, it also becomes vulnerable to misuse:

- 🕷️ Deepfakes: It can be misused to impersonate voices.

- 🚨 Misinformation: Fake audio clips can be generated and used maliciously.

- 🧵 Attribution: Who’s responsible if the model is used unethically?

To mitigate these risks, Nari Labs has issued usage guidelines and encourages watermarking or audio tagging features. However, the potential for misuse also underscores the need for AI regulation in the open source space.

🚀 The Bigger Picture: Why Dia is a Wake-Up Call for Big Tech

Big tech companies often monopolize AI innovation by locking features behind subscriptions or APIs. Dia disrupts this model, proving that excellent AI can emerge from non-traditional players.

This has implications for:

- 🌟 Startups: Innovation doesn’t need VC backing.

- 🏫 Education: Students can lead, not just follow.

- 🌎 Policy: Governments must rethink AI funding and open access.

Moreover, it proves that open source AI isn’t just a fringe movement—it’s becoming a legitimate pathway to cutting-edge tech.

🎡 What’s Next for Nari Labs?

Nari Labs plans to launch a consumer-facing app centered around Dia for content creation and voice remixing. Think TikTok meets Siri—with AI-generated voices adding a new layer of personalization.

💡 The founders also aim to expand Dia’s capabilities:

- 🌍 Add support for more languages

- 😊 Improve emotional range and subtlety

- ⏱️ Reduce latency for real-time use cases

As they gather more feedback from the open source community, Dia will likely evolve into a full ecosystem—powering everything from education to entertainment.

🌈 Conclusion: A New Era for AI Innovation

Dia isn’t just an AI model—it’s a movement. Built by two undergraduate students with ❌ zero funding, it challenges the monopoly of commercial AI and champions the power of 🔗 open source. With advanced emotional expression, non-verbal sound capabilities, and an open invitation for global collaboration, Dia is more than a tech story—it’s a symbol of what’s possible when curiosity meets code.

🥇 In the ever-competitive world of artificial intelligence, Dia offers a powerful reminder: the future doesn’t belong only to the biggest companies; it belongs to the boldest creators.
